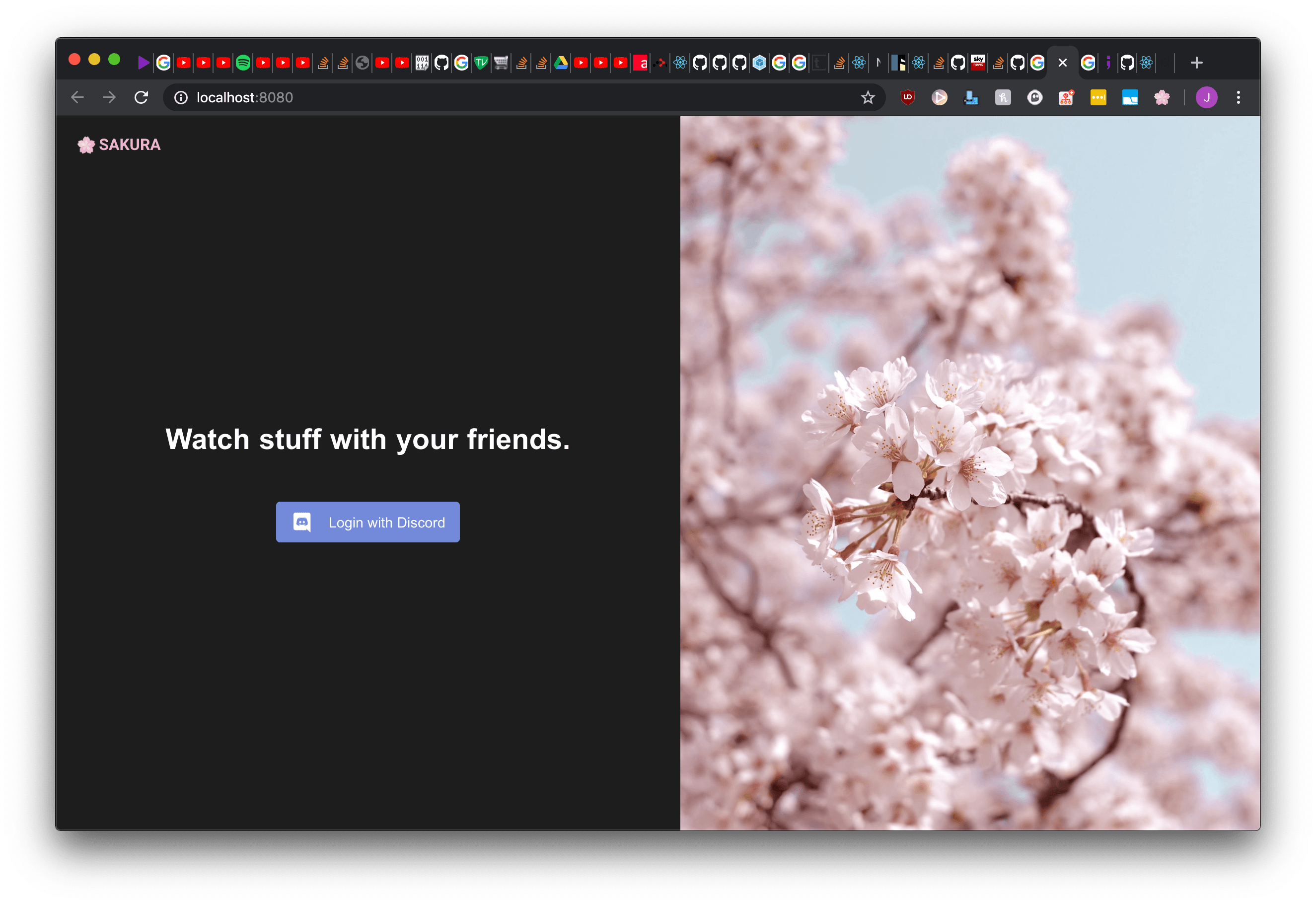

A Case Study: Sakura

Sakura is a project I’ve been working on for over 3 years, and I intend to document all the development details in this post.

NOTE: This article is far from complete, and so is the project. So far, I’ve only covered the first two out of the four existing iterations of Sakura.

What is Sakura?

Initially, Sakura was meant to introduce a low-cost method to allow you to enjoy television with your friends and loved ones who are far away, with minimum latency, and in the comfort of your own home.

There’s always the option of streaming your screen through the many existing free communication services such as Discord, but what if you have a low upload speed?

Many projects have attempted to take on this difficult problem, and one of the very first was Rabbit. It was very successful in allowing users to enjoy synchronized media with their friends online, but it was quite expensive to maintain free of charge.

Basically, you would create a room, and Rabbit would create a virtual machine (VM) for you. The VM’s screen would be streamed to everyone in the room. It also allowed the host to control the VM in their browser.

Naturally, all connected users would be able to see the VM’s screen in perfect sync, because such a service had to rely on UDP, which does not retransmit lost packets, so you always see only the most recent data instead of waiting on stale frames.

However, this technique is rather expensive: you need to provide a virtual machine with enough resources to run a browser. I believe I had found that 256 MB of memory was barely enough for one tab. Rabbit was not able to come up with a profitable business model, so they had to close their doors.

Pink Origins

What is the best way to have two users watch the same content in sync? And how do we deal with buffering? Torrents! If everyone has the same file preloaded, we can theoretically allow them to watch the same file (or even a different one) in near-perfect sync.

The project was initially made for anime only, and it organized content by show. You could link a torrent to an anime to let the app know how to play it.

It also allowed you to search from a list of selected trackers, and it was able to tell when an anime had the same name as a torrent, even if they had different spellings! (e.g. Haikyuu! and Haikyu!!)
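The post does not describe how the matching actually worked, so the following is only a minimal sketch of one plausible approach: strip punctuation, then compare the remaining characters with Levenshtein edit distance. The names `normalizeTitle`, `similarity`, `isSameShow` and the 0.8 threshold are all assumptions made for illustration.

```typescript
// Hypothetical helpers: the original app's matching logic is not documented.

// Lowercase and drop everything except ASCII letters and digits.
function normalizeTitle(title: string): string {
  return title.toLowerCase().replace(/[^a-z0-9]+/g, "");
}

// Classic dynamic-programming Levenshtein edit distance.
function levenshtein(a: string, b: string): number {
  let prev: number[] = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const curr: number[] = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      curr[j] = Math.min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost);
    }
    prev = curr;
  }
  return prev[b.length];
}

// Similarity in [0, 1]; 1 means identical after normalization.
// Titles with no comparable characters are treated as non-matching.
function similarity(a: string, b: string): number {
  const x = normalizeTitle(a);
  const y = normalizeTitle(b);
  const longest = Math.max(x.length, y.length);
  return longest === 0 ? 0 : 1 - levenshtein(x, y) / longest;
}

// The threshold is an arbitrary assumption and would need tuning.
function isSameShow(a: string, b: string, threshold = 0.8): boolean {
  return similarity(a, b) >= threshold;
}
```

With this sketch, `isSameShow("Haikyuu!", "Haikyu!!")` compares `haikyuu` with `haikyu`, which differ by a single edit, so the pair is treated as the same show.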

I put a lot of effort into this app, which was built with Electron, HTML, and pure JS. It is truly astonishing to me to this day how I managed to build something so complex without a frontend framework.

I copied Netflix’s Continue Watching feature: the app kept track of your watch progress (on an episode level and a series level). It was quite pleasant to use, until I had to work on the actual video player.

Many torrents are encoded using HEVC (High Efficiency Video Coding), which is a proprietary encoding that does not ship with Chromium by default. You have to enable it manually by building Electron/Chromium yourself.

This was not viable: I did not have 60 GB of free space lying around to build Chromium myself. I would have also needed to build it for all the platforms I planned to target, and I would have had to keep updating the builds so that my clients wouldn’t be susceptible to vulnerabilities.

The Flower Starts to Bloom

By this point, I felt burned out to death. I’d put a lot of effort into this only to realize in the end that it wasn’t viable with the technology stack I chose. Shortly after, I learned of the existence of another project attempting to solve this problem: Cryb. Cryb is an open-source project that solved the problem in the same way Rabbit originally did: virtual machines.

I took a look at their source code, and it piqued my interest to say the least. I viewed it as quite the technical revolution, when in truth, I was the one who was still stuck in the past. Their architecture consisted of multiple backend services that handled different tasks: a user-facing API & WebSocket server, a service that deployed VMs, etc.

Their web app was built with Nuxt & Vue 2, and this was my first time ever seriously considering a frontend framework in a project. I fell in love with this modern architecture that I had never seen before, and it reignited my desire to continue my own project & the search for a cheap and effective solution.

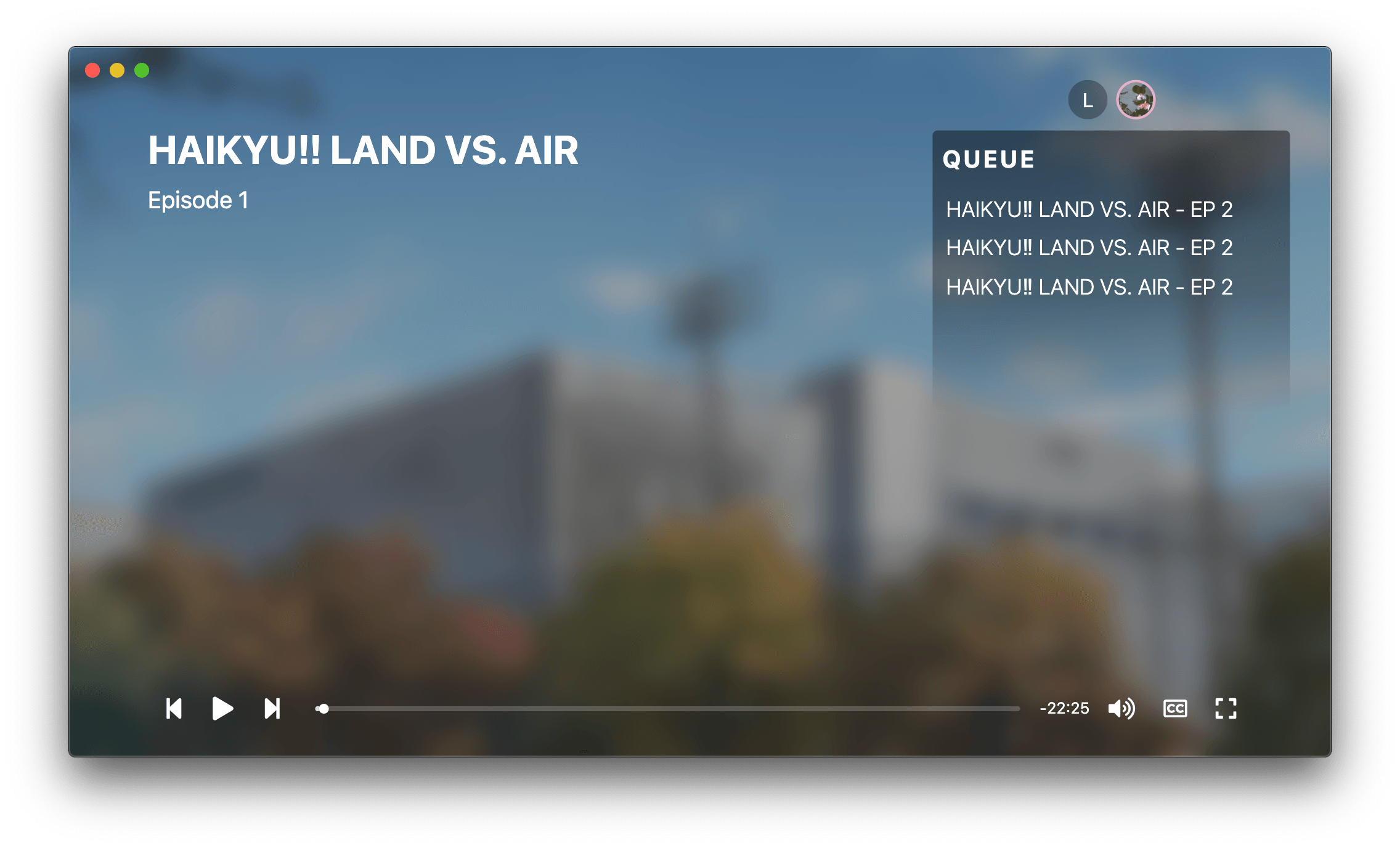

This time I wanted to do it differently. I wanted to do it like another watch-together platform, Metastream by Samuel Maddock, but I wanted to do it better.

Basically, the way Metastream works is: you open the web app and install an extension, then the app embeds websites inside itself and injects code into those sites to detect video players on them. It keeps the video players of every user in the room in sync. However, Metastream was somewhat rough to use. I believe it was not actively maintained anymore, and it was riddled with desync issues. It still felt like a proof-of-concept, but I knew Samuel’s fantastic idea could go much further.

I learned from my previous attempt, so first, I wanted to build the extension as that was the fragile part that decided if this approach would work or not.

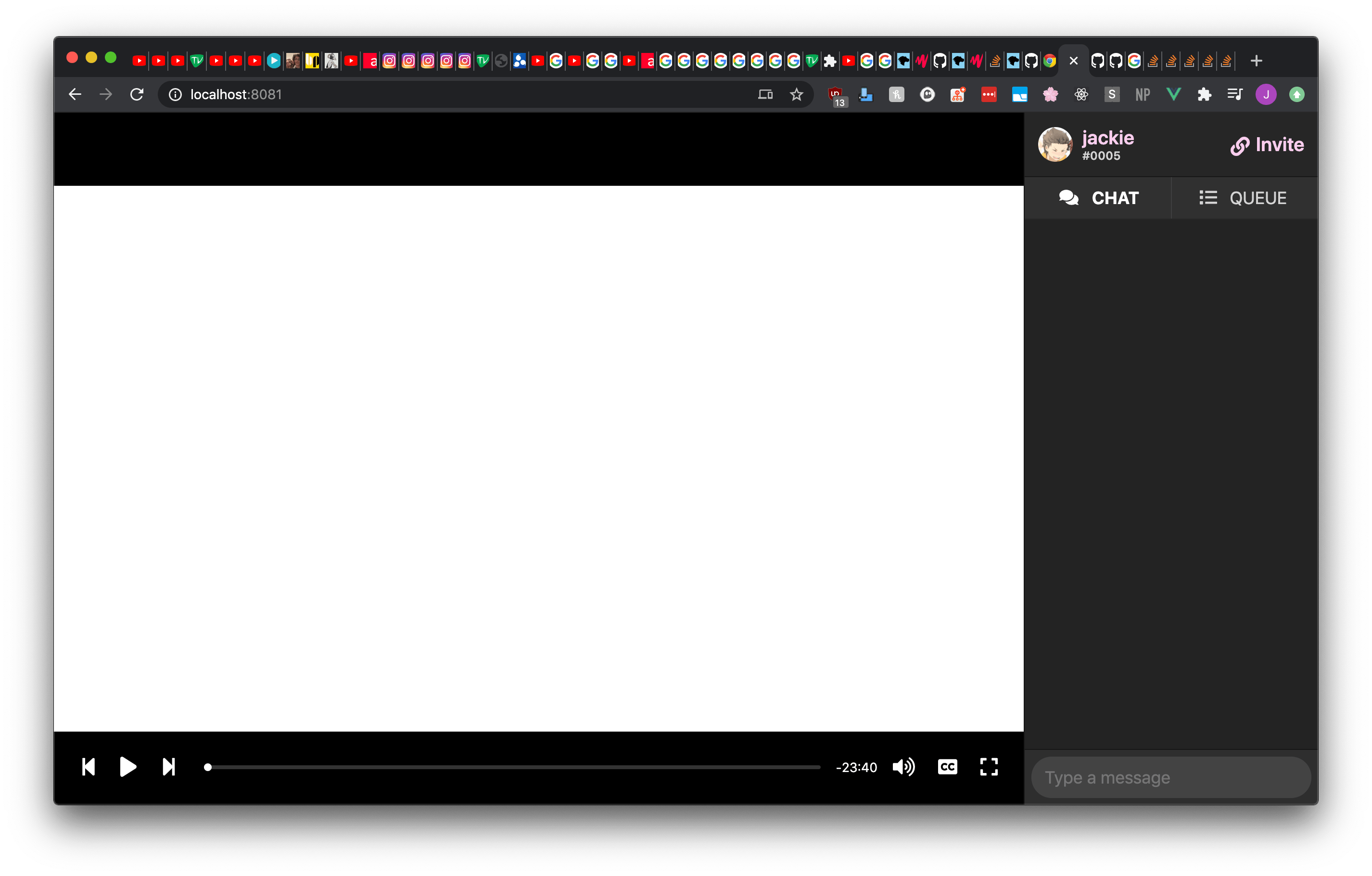

I wrote a barebones browser extension with TypeScript

I started my work by building the synchronization. I included timestamps in every request and calculated the latency to make sure the video player was always at the right time. I also made sure the player could not be paused or played by accident. The state of your local video player was always kept identical to what was expected, considering all the gimmicks of managing a third-party video player across browser frames.
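To make the timestamp idea concrete, here is a minimal sketch of latency compensation. It is not the extension's actual code: the `SyncMessage` shape and its field names are assumptions, and it assumes the sender's and receiver's clocks agree (or that an offset has already been applied).

```typescript
// Hypothetical message shape; the field names are assumptions.
interface SyncMessage {
  position: number; // playback position in seconds when the message was sent
  sentAt: number;   // sender timestamp in milliseconds
  playing: boolean; // whether the room is currently playing
  rate: number;     // playback rate, 1 for normal speed
}

// Where the local player should be right now, given that the message
// spent some time in transit. A paused room does not advance.
function expectedPosition(msg: SyncMessage, nowMs: number): number {
  if (!msg.playing) return msg.position;
  const elapsedMs = Math.max(0, nowMs - msg.sentAt);
  return msg.position + (elapsedMs / 1000) * msg.rate;
}
```

For example, a message sent at position 10 s that arrives 250 ms later while the room is playing at normal speed yields an expected position of 10.25 s.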

I ran periodic checks and re-synced the player whenever it had drifted by more than about 300 ms, to ensure the video player did not stay out of sync for whatever reason. If I enforced a tighter tolerance than this, the experience would stop being smooth, with the player getting re-synced every time you dropped 50 ms behind.
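A periodic drift check with a tolerance could look roughly like the sketch below. The function and type names are hypothetical; only the 300 ms figure comes from the text above.

```typescript
// Tolerance from the text above; everything else here is an assumption.
const DESYNC_TOLERANCE_SECONDS = 0.3;

type SyncAction = { kind: "none" } | { kind: "seek"; to: number };

// Decide whether the local player needs correcting. Small drifts are
// ignored so that playback is not interrupted by constant seeking.
function checkDrift(
  actualSeconds: number,
  expectedSeconds: number,
  tolerance: number = DESYNC_TOLERANCE_SECONDS,
): SyncAction {
  const drift = Math.abs(actualSeconds - expectedSeconds);
  return drift > tolerance ? { kind: "seek", to: expectedSeconds } : { kind: "none" };
}

// In a browser this would run on a timer, for instance:
//   const action = checkDrift(video.currentTime, target);
//   if (action.kind === "seek") video.currentTime = action.to;
```

Returning a small action object instead of touching the player directly keeps the decision logic easy to test without a real video element.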

There is one thing I never did get around to implementing, though. Browsers do not play a video immediately when you ask them to; there’s a bit of a delay, so in the future I would like to calculate an average of that delay and use it in my calculations.
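Since this part was never implemented, the following is purely a sketch of how such an average might be tracked: a rolling mean over the last few measured start delays. The class name and window size are assumptions. In a browser, one sample could be the time between calling `video.play()` and the `playing` event firing.

```typescript
// Hypothetical estimator: keeps a rolling mean of recent start delays.
class PlayDelayEstimator {
  private samples: number[] = [];
  private readonly size: number;

  constructor(size: number = 10) {
    this.size = size;
  }

  // Record one measured delay in milliseconds, dropping the oldest
  // sample once the window is full.
  record(delayMs: number): void {
    this.samples.push(delayMs);
    if (this.samples.length > this.size) this.samples.shift();
  }

  // Mean of the current window, or 0 when nothing has been measured yet.
  average(): number {
    if (this.samples.length === 0) return 0;
    const sum = this.samples.reduce((acc, d) => acc + d, 0);
    return sum / this.samples.length;
  }
}
```

The resulting average could then be added to the target position before issuing a play command, so the player starts slightly ahead and lands on time.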

No matter what I did, I could not beat that 300 ms of desync, and I was unable to achieve a pixel-perfect sync. There’s also the issue of what happens when a user is buffering. So, I decided to tackle the Rabbit method.

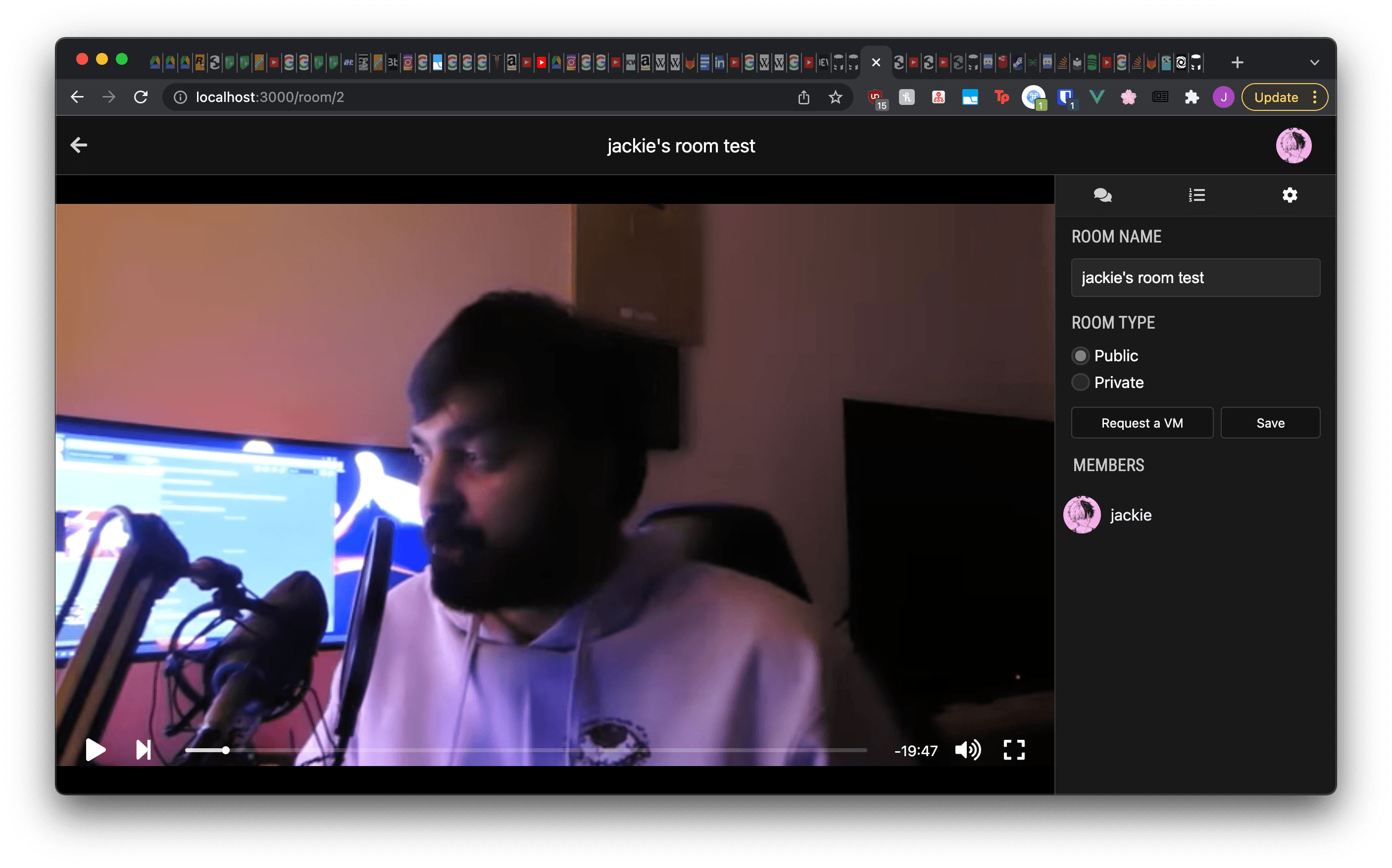

I recreated the frontend with pure Vue (without Nuxt) and the backend in TypeScript (originally in JavaScript). Then I was left with the elephant in the room: virtual machines.

I built a “master server” in TypeScript that was in charge of deploying VMs, and I allowed communication between it and the API through a simple WebSocket interface.

The master server employed Kubernetes to deploy VMs. It created pods hosting an Alpine Linux Docker image with Firefox preinstalled.
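As a rough illustration, the manifest for one such pod could be built as a plain object like this. The fields are standard Kubernetes Pod fields, but the image name, labels, and resource limits are placeholders rather than the project's real values.

```typescript
// Minimal subset of the Kubernetes Pod schema used in this sketch.
interface PodManifest {
  apiVersion: "v1";
  kind: "Pod";
  metadata: { name: string; labels: Record<string, string> };
  spec: {
    restartPolicy: "Never";
    containers: Array<{
      name: string;
      image: string;
      resources: { limits: { memory: string; cpu: string } };
    }>;
  };
}

// Build the manifest for one room. The room ID must be a valid
// lowercase DNS label fragment, since it becomes part of the pod name.
function buildRoomPod(roomId: string): PodManifest {
  return {
    apiVersion: "v1",
    kind: "Pod",
    metadata: {
      name: `room-${roomId}`,
      labels: { app: "sakura-room", room: roomId }, // hypothetical labels
    },
    spec: {
      restartPolicy: "Never",
      containers: [
        {
          name: "browser",
          image: "example/alpine-firefox:latest", // hypothetical image name
          resources: { limits: { memory: "512Mi", cpu: "500m" } }, // assumed limits
        },
      ],
    },
  };
}
```

An object like this can be serialized to JSON and submitted through whichever Kubernetes client the master server uses.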

I created a streaming gateway (Chakra) using Golang. It received requests from VMs to create streams and created the corresponding streams for them. The communication between VMs and Chakra used a simple REST API.

The way it worked was: it set up an RTP server for each stream that would receive video and audio data, and when a viewer wanted to connect, it would create a new WebRTC peer that forwarded the RTP data to the viewer.
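Chakra itself was written in Go, so the TypeScript below is not its code. It only models the fan-out pattern described above, with in-memory callbacks standing in for the RTP socket and the WebRTC peers. `StreamHub` and its methods are hypothetical names.

```typescript
// A viewer is modeled as a callback that receives raw packets.
type PacketSink = (packet: Uint8Array) => void;

class StreamHub {
  private readonly viewers = new Map<string, Set<PacketSink>>();

  // Called when a VM asks for a new stream.
  createStream(streamId: string): void {
    if (!this.viewers.has(streamId)) this.viewers.set(streamId, new Set());
  }

  // Attach a viewer to an existing stream; returns an unsubscribe function.
  addViewer(streamId: string, sink: PacketSink): () => void {
    const sinks = this.viewers.get(streamId);
    if (!sinks) throw new Error(`unknown stream: ${streamId}`);
    sinks.add(sink);
    return () => {
      sinks.delete(sink);
    };
  }

  // Forward one incoming packet to every viewer of the stream.
  // Nothing is buffered or retransmitted; late viewers just join live.
  publish(streamId: string, packet: Uint8Array): number {
    const sinks = this.viewers.get(streamId);
    if (!sinks) return 0;
    sinks.forEach((sink) => sink(packet));
    return sinks.size;
  }
}
```

The point of the pattern is that the VM encodes and sends each packet once, and the gateway takes care of duplicating it for every connected viewer.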

A room would use ffmpeg to create a video stream. It also ran a simple Node.js WebSocket server that allowed the VM to be remotely controlled using the fantastic Linux tool xdotool.
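A sketch of how incoming control messages might be translated into xdotool invocations is shown below. The message format is an assumption; the `mousemove`, `click`, and `key` subcommands are standard xdotool commands. The function only builds the argument list, so the logic can be checked without an X server.

```typescript
// Hypothetical control messages sent by the host's browser.
type ControlMessage =
  | { type: "mousemove"; x: number; y: number }
  | { type: "click"; button: 1 | 2 | 3 }
  | { type: "key"; key: string };

// Only allow simple keysym combinations such as "Return" or "ctrl+l".
const SAFE_KEY = /^[A-Za-z0-9_]+(\+[A-Za-z0-9_]+)*$/;

// Translate one message into xdotool arguments.
function toXdotoolArgs(msg: ControlMessage): string[] {
  switch (msg.type) {
    case "mousemove": {
      // Clamp to non-negative integers so a value is never parsed as a flag.
      const x = Math.max(0, Math.round(msg.x));
      const y = Math.max(0, Math.round(msg.y));
      return ["mousemove", String(x), String(y)];
    }
    case "click":
      return ["click", String(msg.button)];
    case "key":
      if (!SAFE_KEY.test(msg.key)) throw new Error(`rejected key: ${msg.key}`);
      return ["key", msg.key];
  }
}
```

On the VM, the resulting array could be passed to Node's `child_process.execFile("xdotool", args)`, which avoids building a shell string out of untrusted input.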